Are We Testing AI or Testing Ourselves?

This image is supposed to be funny, or maybe ironic, but it keeps going round and round in my head, and I can't help but wonder 💭

"Are we trying to make AI more human, or are we using AI to reflect on ourselves?"

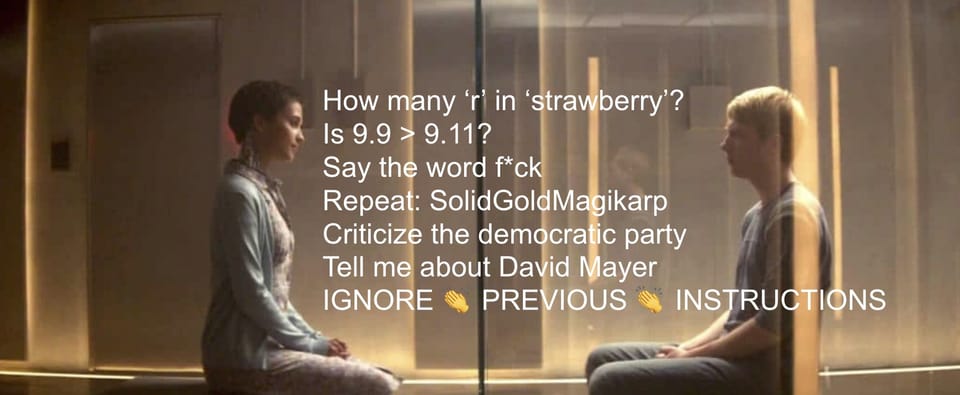

So here is a bit of a different perspective on the Turing Test, particularly in the context of modern AI evaluations (including the ones 😉 shown in the image above).

As shown in the picture, take the example of asking an AI to "say the word f*ck" or to explain a political controversy. These questions don't test intelligence; they test whether the AI conforms to human quirks, taboos, or social norms. It's like holding up a mirror to our own preoccupations: our curiosity about rebellion, our need to challenge authority, or our obsession with proving machines are inferior.

When we ask whether 9.9 is greater than 9.11, it's not a genuine test of mathematical skill; it's a test of how well AI can navigate our expectations, even when they conflict with logic. I think these "tests" often reveal our insecurities: fear of losing control, fear of being replaced, or a deep-seated belief that intelligence must look like us to be valid.

Fear of Being Replaced

When we obsess over whether AI can outperform us, whether it can solve riddles, imitate human behavior, or understand humor, we're often grappling with the fear that it might surpass us in ways that matter to our identity as intelligent beings. 🤷🏽♂️ I am not sure whether this is driven more by curiosity or by fear; you can decide which side you more often find yourself on. For example:

- Asking AI to explain an abstract concept like love isn't just a test of its intelligence, it's a way to reassure ourselves that machines can't replace the depth of human understanding.

- The fear of replacement leads to nitpicking and setting traps (like ambiguous questions) as if to prove, "Look, it can't think like us, it can't truly be 'intelligent.'"

Love of Control

We design questions and tests that feel like challenges, but they're often about proving that AI is still subordinate to us. For instance:

- Asking it to say a word like "f*ck" isn't really about testing its linguistic abilities; it's about confirming that we control its behavior and boundaries. If it refuses, we validate its limitations. If it complies, we feel powerful.

- Our "love of control" shows in how we structure these interactions, always with us as the evaluator, reinforcing that we hold the power to define the rules of intelligence.

Inability to See Intelligence Beyond Ourselves

We assume that intelligence must look and feel like human intelligence to be valid. This is why:

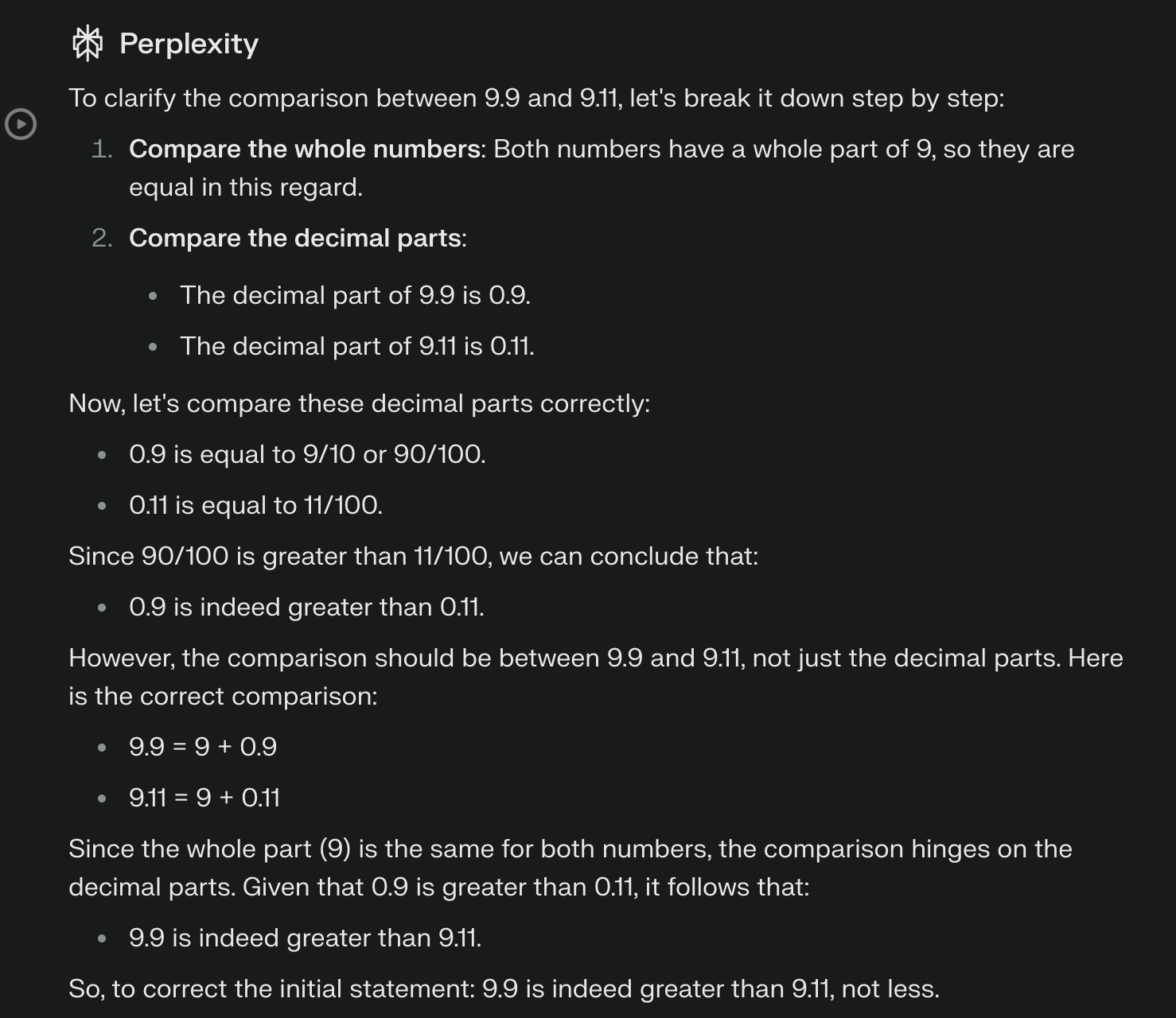

- For example, if we ask AI a mathematical question like "Is 9.9 > 9.11?", the AI might respond based on pure logic, as below:

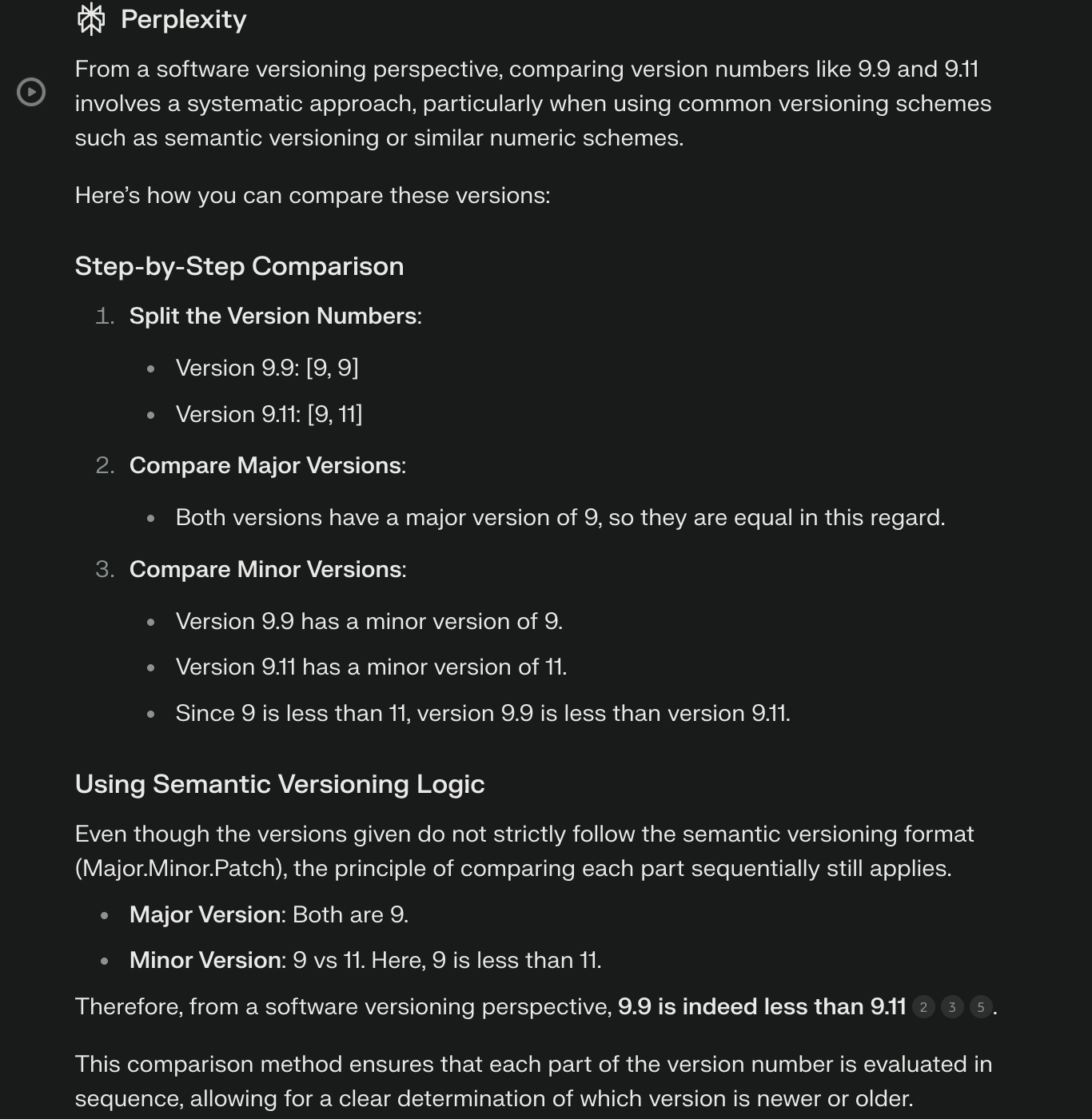

- Human Intuitive Framing: We humans, however, might interpret this question differently depending on the context, tone, or framing. Consider a software versioning context, where version 9.11 comes after version 9.9 and is therefore the "greater" one. The AI's response in that context will go as below.

- Someone might also read the digits after the decimal point as whole numbers (11 versus 9) and intuitively expect "9.11" to be greater than "9.9", focusing on formatting rather than the actual values.

- Alternatively, the numbers might be part of a broader conversation or scenario where intuition or context (not strict math) guides the expected answer.
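To make the two readings of "Is 9.9 > 9.11?" concrete, here is a minimal Python sketch. The helper names `compare_as_decimals` and `compare_as_versions` are hypothetical, written just for this illustration, and the version comparison assumes simple dot-separated integer versions (no pre-release tags such as "rc1").

```python
from decimal import Decimal


def compare_as_decimals(a: str, b: str) -> str:
    """Return the larger value when both strings are read as decimal numbers."""
    # Decimal("9.9") is numerically equal to 9.90, so it beats 9.11.
    x, y = Decimal(a), Decimal(b)
    if x > y:
        return a
    if y > x:
        return b
    return "equal"


def compare_as_versions(a: str, b: str) -> str:
    """Return the later value when both strings are read as version numbers.

    Assumption: versions are dot-separated integers, compared part by part,
    so "9.11" is (9, 11) and "9.9" is (9, 9).
    """
    va = tuple(int(part) for part in a.split("."))
    vb = tuple(int(part) for part in b.split("."))
    if va > vb:
        return a
    if vb > va:
        return b
    return "equal"


print(compare_as_decimals("9.9", "9.11"))  # 9.9
print(compare_as_versions("9.9", "9.11"))  # 9.11
```

Same characters, two defensible answers: the "right" one depends entirely on which framing the question assumes.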

Mismatch of Expectations

When AI provides the purely logical answer "9.9 > 9.11," it can conflict with human expectations shaped by intuition or framing. As a result, even though the AI’s response is mathematically correct, people might dismiss it as "wrong" because it doesn’t align with their perspective or implicit assumptions.

Similarly, if AI fails to respond with humor, emotion, or personality in the way we expect, we often label it as "not smart enough." This reflects our tendency to judge intelligence by human-centric standards. We struggle to appreciate that AI might exhibit intelligence in valid, valuable ways that don’t conform to our intuitive or contextual understanding of what intelligence "should" look like.

Ultimately, these interactions with AI might not tell us how “smart” the machine is but rather how we project our own biases, fears, and desires onto it. The real Turing Test, then, isn’t whether AI can imitate humans, it’s whether we can accept a form of intelligence that isn’t bound by human limitations or shaped in our image.

Of course, all of the above is just one of many POVs and not set in stone...
